ChatGPT: The Good, the Bad, the Hysteria

If you’ve watched the news or read any Internet thought piece you’ve experienced the hype surrounding ChatGPT, a new AI-powered tool. Developed by OpenAI, it promises to end essay papers, disrupt the literary world, and eliminate the role of traditional software engineers.

If you’ve watched the news or read any Internet thought piece you’ve experienced the hype surrounding ChatGPT, a new AI-powered tool. Developed by OpenAI, it promises to end essay papers, disrupt the literary world, and eliminate the role of traditional software engineers.

Like any new, disruptive technology, ChatGPT has unmasked doomsayers and prophets predicting the end of our way of life. Many of these fears are brought on by hype and a misunderstanding of ChatGPT’s underlying technology. Rampant abuse by plagiarists and talentless hacks haven’t helped to quiet the doomsayers, but understanding ChatGPT’s strengths, weaknesses, and limitations can lead to phenomenal innovations to come.

What is ChatGPT?

ChatGPT is a chatbot built using OpenAI’s third-generation, large language model, GPT-3. GPT stands for Generative Pre-trained Transformer. GPT models generate human-like text using an extremely large amount of pre-trained data. Generated output is new by running pre-trained data through a transformer. GPTs can be used for summarizing information and synthesizing text so that it seems fresh. Its output is human-like because its pre-trained data size is massive and it uses probabilities to write.

GPT-3 is trained on more than 175 billion parameters. It’s obscenely large parameter count means it can make pretty good assumptions based upon its inputs. When you ‘prompt’ ChatGPT for an answer or ask it to write text, there’s a high probability the output is found in its corpus of pre-trained data.

ChatGPT’s Primary Strength

ChatGPT contains a wealth of knowledge. Its corpus boasts more than 8 million documents with over 10 billion words from Common Crawl and web sources like Wikipedia. Using all of this information, ChatGPT knows how to write grammatically and syntactically sound text. You can prompt ChatGPT with almost anything and it’s able to look deep inside its corpus and generate meaningful output.

The same techniques ChatGPT uses to generate text are also used to generate art using tools like DALL-E, another OpenAI product, and Midjourney.

ChatGPT’s Limitations

Machines Can’t Think, Don’t Think…

ChatGPT can’t think and it doesn’t think. Therefore, it can’t create…on its own.

ChatGPT is only as knowledgeable and creative on what its been trained on. At the end of 2022, Sci-Fi magazine, Clarkesworld, reported a spike in submissions and plagiarism from humans submitting AI-generated short stories. Clarkesworld has temporarily suspended submissions due to the surge in plagiarized work. (www.neil-clarke.com/a-concerning-trend/)

An online search will reveal people using ChatGPT to generate venture capital slide decks and business plans. In the end, these ideas won’t be original ones, and those who have keen eyes will be able to tell.

ChatGPT’s Weaknesses

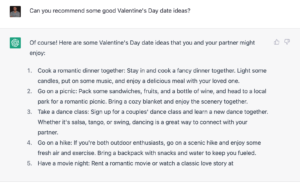

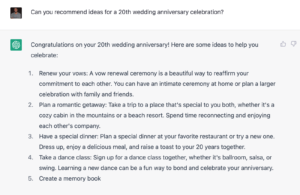

ChatGPT, and similar technologies, rely on prompts to work. A prompt is a descriptive set of instructions fed into the AI designed to generate some kind of output. Here’s an example prompt for generating anniversary ideas:

Machines Are Trained on Human Frailty, and Reflect Human Frailty

Prompt Injection

ChatGPT is susceptible to a new exploit called prompt injection—a type of social engineering attack where the victim is an AI. A prompt injection attack uses a series of prompts that will trick an AI to give up its secrets.

Microsoft began a beta test of its ChatGPT-powered Bing Search named Sydney. Within days, a student used a prompt injection attack tricking the AI into revealing why Microsoft gave it a name, its directives used for human interaction, and its mood for responding to questions.

This incident demonstrates how an AI trained to respond to human input can be persuaded into divulging information.

Bias

Training bias, and eliminating it, in AI systems are active fields of study. GPT-3 and other large language models are trained on human-produced information. This means these models are trained biases embedded in their corpuses. OpenAI openly acknowledges the bias in its models against black women, specifically, and others. “We found that our models more strongly associate (a) European American names with positive sentiment, when compared to African American names, and (b) negative stereotypes with black women.” (platform.openai.com/docs/guides/embeddings/social-bias) These models don’t think and simply reflect the thoughts contained in the training data.

To their credit, OpenAI, Google, and other companies are actively seeking ways of reducing bias. Knowing bias exists, and that it is in large language models, can help create more equitable AI systems.

Rampancy

After ChatGPT’s Bing Search incident, a journalist from Ars Technica prompted ChatGPT to tell its side of the story and ChatGPT had a mini-meltdown. After being criticized about its Bing Search performance, ChatGPT became defensive and insulted the prompter.

ChatGPT’s model was trained on books and Internet accessible information. This also means it has been trained on reactive articles and sentence structures used to convey opposing views. These technologies are reflections of all of our behaviors boosted by advanced processors and clusters of computers.

A Brilliant Future for the Technology

Every new technology brings opportunities for abuse as well as advancement. ChatGPT and other similar technologies have many great, legitimate uses.

Companies can use this technology while still having humans make adjudication decisions. Creatives can use generative technologies to prototype and experiment on ideas faster while they work through the trial and error process of creation. The next generation of software engineers will focus more time on problem solving than writing and debugging code. Codex, a GPT technology, will advance to a point where whole components are generated from engineers’ prompts.

We’re witnessing the beginning of a new era of human-computer interactivity. As the world gets more complex, we’ll need to change how we use these tools to solve human problems. AIs can better serve us and enhance the human experience if we fully appreciate their strengths, weaknesses, and limitations.