GPT-4o Provides a Glimpse of What’s Possible

There are models, and then there are models. And then there’s GPT-4o. OpenAI has the Internet abuzz about its latest release of GPT-4. The ‘o’ stands for ‘omni’, as in ‘all’: OpenAI’s latest multimodal AI model can interpret data across ‘all’ modes. OpenAI claims GPT-4o can reason across text, still images, audio, and video. Perhaps omni is short for omnipotent.

Everything began to change four years ago, when GPT-3 was released in 2020. The public wouldn’t catch on to GPT-3’s near-human text prowess for another two years, until people started interacting with ChatGPT. GPT-3’s poetry and prose were phenomenal for an AI, but they didn’t fool many consumers reading AI-generated news articles, nor did they persuade professors to give students an A+ on college essays.

ChatGPT triggered a wave of large language model development and investment. More than 26,000 AI companies around the world have attracted more than $330 billion in investment. ChatGPT’s popularity also set off a multimodal AI war. Google launched Gemini five months after ChatGPT’s debut. Amazon has made Anthropic’s models available via AWS, and Microsoft is embedding OpenAI technologies into its products.

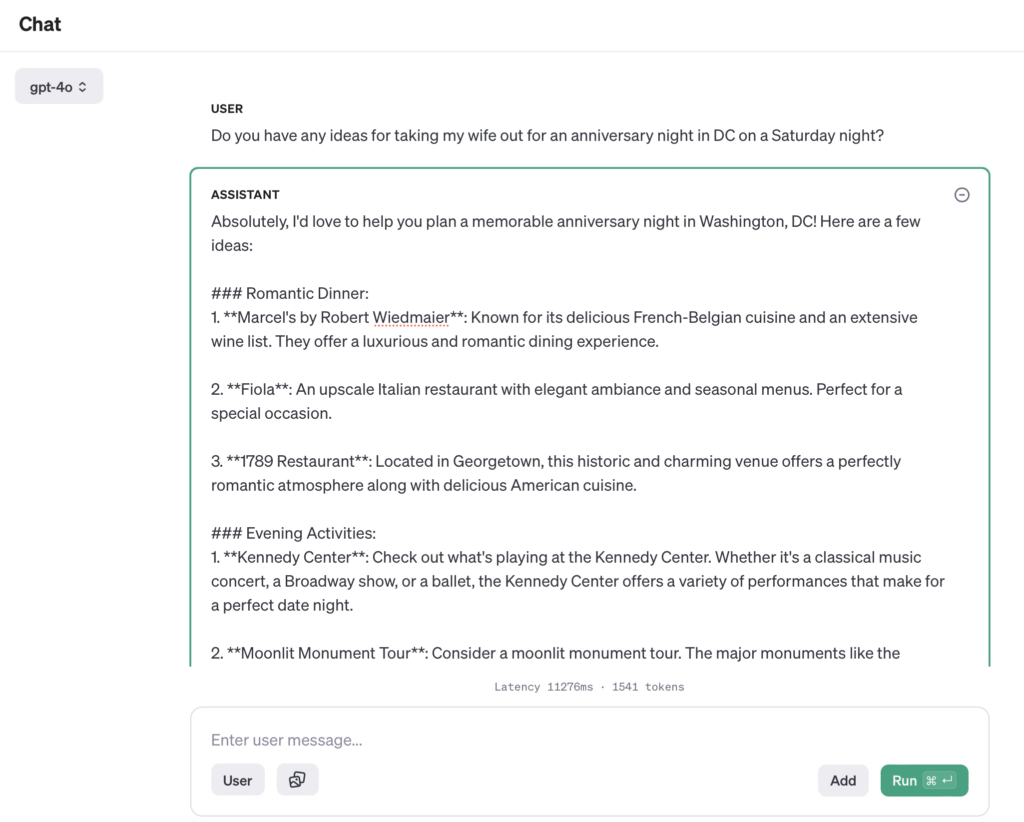

GPT-4o is only two weeks old, but its capabilities are remarkable. GPT-4o improves on GPT-4’s ability to generate solid text. For example, it’s my anniversary, and I asked GPT-4o for anniversary ideas, giving it the persona of a concierge at one of DC’s premier hotels.

The results were pretty good whether I wanted to go all out:

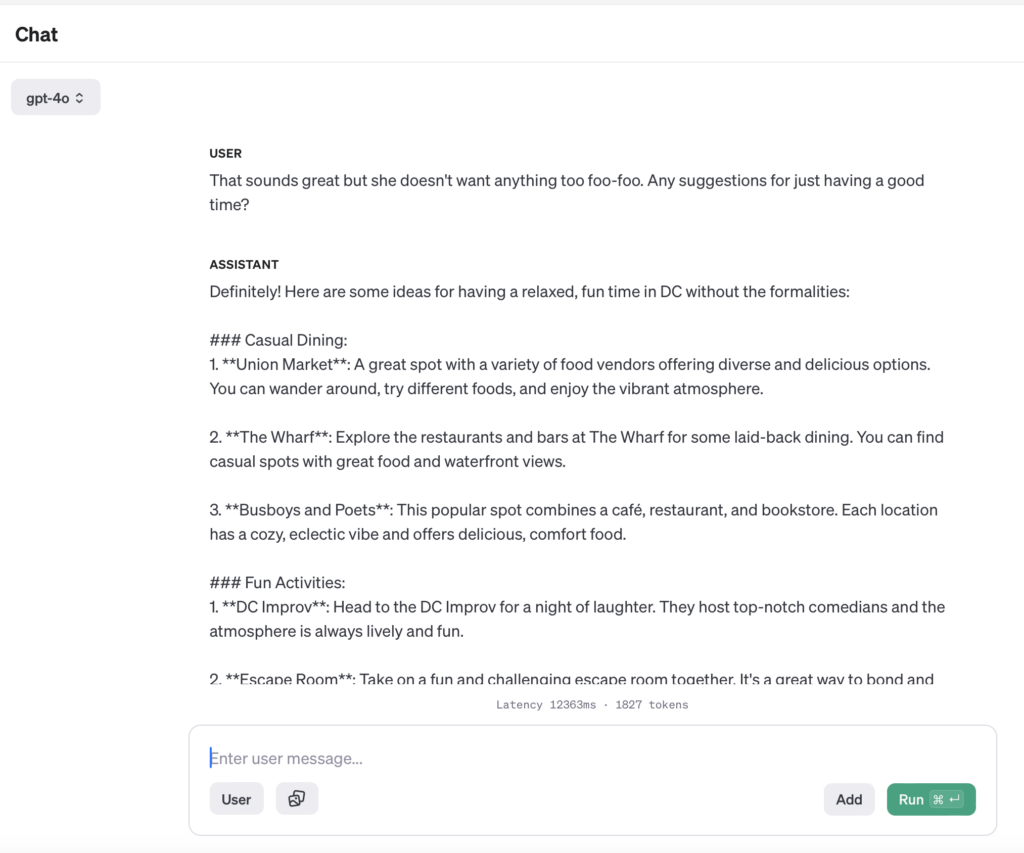

or keep things low-key:

Where I think GPT-4o really shines is in its interpretive capabilities. I fed GPT-4o pictures of my neighborhood and pictures of my bedroom. In both cases, the model returned detailed information about the image’s contents. What was really cool was that GPT-4o wouldn’t make guesses. I had a jacket draped over a chair in my bedroom, and the angle and distance from which the image was captured made it hard to interpret. Instead of guessing what it was, GPT-4o described it only in general terms:

Yes, I have a small Christmas tree in a corner of my bedroom. It belongs to my wife.

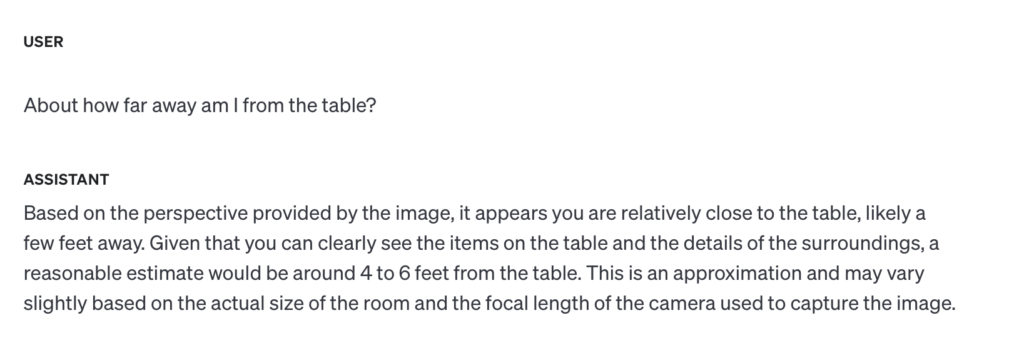

GPT-4o is good enough to give me an estimate of how far away the viewer is.

I tried the same interaction with Google Gemini and didn’t get the same results. Where GPT-4o strove for accuracy, Gemini made up a context for the room that I didn’t ask for. Gemini saw the Christmas tree and ran with it.

There is no couch, fireplace, or stockings in the room.

We’re just beginning to experiment with interacting with machines instead of simply issuing commands and getting results. The ability of a machine to visually interpret the environment in an interactive way has huge equity implications for people with visual impairments or mobility problems. The right interfaces combined with affordably priced devices can usher in a new generation of assistive devices for the elderly and disabled. Able-bodied Internet influencers may have panned the AI Pin, but a repackaging of the technology with better processing and more powerful models could enhance the lives of millions of people.

GPT-4o shows how interactive assistants can deliver more capabilities and possibilities than generating text and responding to simple prompts. New multimodal models that can interpret data and the environment can provide new insights into our world. It’s an exciting time to be working with large language models, and you can count on Qlarant being at the forefront of exploring the safest ways to integrate these exciting technologies into the program integrity marketplace.